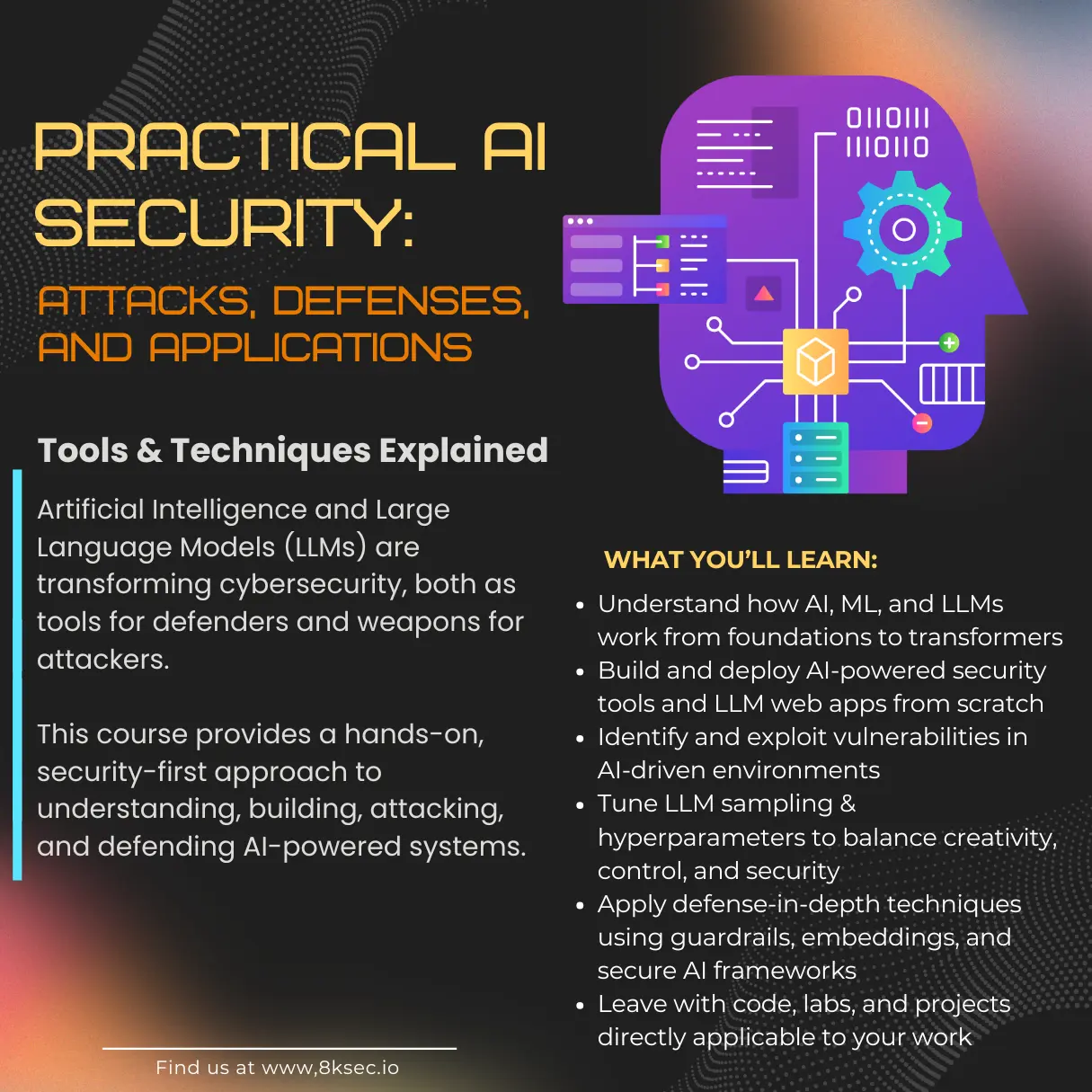

Practical AI Security: Attacks, Defenses, and Applications

Live On-Site / Live Virtual / On-Demand

Learn Offensive And Defensive AI Security Strategies

Elevate your expertise in AI security. This comprehensive course covers LLM fundamentals, prompt engineering, AI agents, MCP servers, prompt injection attacks, and defensive strategies.

What You Will Learn

The AI security landscape is evolving rapidly, and this course places you at the forefront. You will gain a thorough understanding of the transformer architecture that powers modern large language models (LLMs), explore how these models are integrated into applications through RAG pipelines and agent frameworks, and learn the security implications at every layer of the stack.

On the offensive side, you will master prompt injection attacks, jailbreaking techniques, MCP server exploitation, and AI agent manipulation. The course walks through real-world attack scenarios, including data exfiltration through tool use, supply chain poisoning, and multi-turn escalation. On the defensive side, you will use AI security frameworks such as Google SAIF and the OWASP LLM Top 10, build AI gateways, configure guardrails, and apply STRIDE threat modeling to AI systems.

Every module is paired with hands-on labs where you attack and defend real AI systems. By the end of the course, you will have the practical skills to perform comprehensive security assessments of LLM-powered applications and deploy effective defenses in production environments.

Key Objectives

- Understand transformer architecture and how LLMs process and generate text

- Build and secure RAG pipelines using LangChain and LlamaIndex

- Master prompt engineering techniques for both attack and defense

- Exploit and defend MCP (Model Context Protocol) servers

- Assess the security of AI agents and multi-agent systems

- Apply the Google SAIF and OWASP LLM Top 10 frameworks

- Perform direct, indirect, and multi-turn prompt injection attacks

- Identify and exploit vulnerabilities in AI-generated applications

- Apply STRIDE threat modeling to AI systems

- Implement AI gateways and guardrails for production deployments

- Assess the supply chain integrity of ML models and their dependencies

- Use Fabric AI and other automation tools for security workflows

All our live trainings are highly customizable. We can tailor the content to cover topics specific to your team's needs. Contact us for more details.

Syllabus

Module 1: Foundations of AI and LLMs

- Transformer architecture and self-attention mechanisms

- Tokenization, embeddings, and positional encoding

- Pre-training, fine-tuning, and RLHF

- LLM attack surface analysis

- Security implications of the architecture

Module 2: Building with LLMs

- RAG (Retrieval-Augmented Generation) pipelines

- LangChain architecture and components

- LlamaIndex for knowledge retrieval

- Advanced prompt engineering techniques

- Vector databases and embedding security

Module 3: MCP Servers & AI Agents

- Model Context Protocol architecture

- Building MCP servers and clients

- AI agent workflows and tool usage

- Autonomous agent patterns (ReAct, Chain-of-Thought)

- Multi-agent system design

Module 4: AI Security Frameworks

- Google Secure AI Framework (SAIF) deep dive

- OWASP LLM Top 10 overview and application

- STRIDE threat modeling for AI systems

- Building an AI security assessment methodology

- Risk prioritization and mitigation planning

Module 5: Prompt Injection Attacks

- Direct prompt injection techniques

- Indirect injection via documents and tools

- Multimodal prompt injection attacks

- Jailbreaking techniques and bypasses

- Prompt injection defenses and detection

Module 6: AI Application Vulnerabilities

- Vulnerabilities in AI-generated code

- Package hallucination and dependency confusion

- Supply chain risks for AI applications

- Data poisoning and model integrity

- Secure development practices with AI tools

Module 7: MCP Exploitation & Agent Security

- MCP server attack techniques

- Tool poisoning and data exfiltration

- Agent hijacking and privilege escalation

- Supply chain attacks on MCP registries

- Hardening MCP deployments

Module 8: Defensive AI & Gateways

- AI gateway architecture and deployment

- Input validation and output filtering guardrails

- Fabric AI for security automation

- Supply chain integrity for ML models

- Secure AI deployment and monitoring

Prerequisites

To participate successfully in this course, attendees should have the following:

- Basic understanding of cybersecurity concepts

- Familiarity with programming (Python preferred)

- Basic knowledge of machine learning concepts (helpful but not required)

- Interest in AI/LLM security

Duration

3 Days

Ways To Learn

- On Demand

- Live Virtual

- Live On-Site

Who Should Attend?

Security professionals, AI/ML engineers, software developers building AI applications, and anyone looking to understand AI security threats and defenses.

Laptop Requirements

- Laptop with at least 8 GB of RAM and 40 GB of free disk space

- Cloud lab instances are provided

- Administrative access on the system

- Setup instructions are sent before the course

Trusted Training Providers

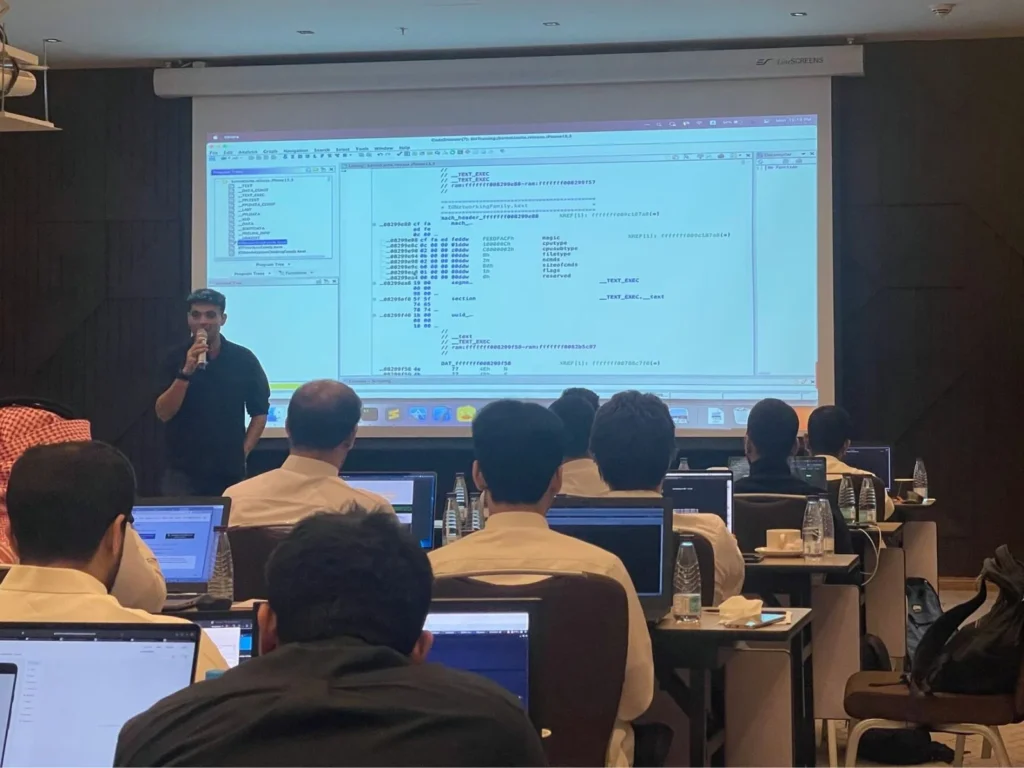

Our trainers have more than ten years of experience delivering training at conferences such as Black Hat, HITB, Power of Community, Zer0Con, OWASP AppSec, and more.

Take Your Skills To The Next Level

Our Modes Of Training

On Demand

Learn at your own pace

Perfect for Self-Paced Learners

- Immediate access to materials

- Lecture recordings and self-assessments

- 365 days of access

- Certificate of completion

- Dedicated email support

Live Virtual

Get in touch for pricing

Perfect for Teams in Multiple Locations

- Real-time interaction with expert trainers via Zoom

- Customizable content for your team

- Continued support after training

- Hands-on labs with cloud environments

Live On-Site

Get in touch for pricing

Perfect for Teams in One Location

- Real-time interaction at your onsite location

- Customizable content for your team

- Continued support after training

- Hands-on labs with cloud environments

The information on this page is subject to change without notice.

Contact Us

Have a question or want to learn more about this training? Get in touch with us.

Our Location

51 Pleasant St # 843, Malden, MA, US, 02148

General Inquiries

contact@8ksec.io

Trainings

training@8ksec.io